14.2. Analysis of list operators¶

Conventional wisdom states that a list is faster than a vector

when operating on elements other than the back.

We know push_back() is \(O(1)\) for vector and we know

that vector doesn’t have a ‘push_front’, because it’s something

we intuitively feel we should discourage in a vector.

Every push front would involve shifting all the elements in the vector

down one position.

The table below shows the average complexity efficiency

of some basic list operations.

Note that many are constant time.

Note that many list operations such as insert and erase take an iterator

as a parameter.

Once you have the iterator, these operations take constant time,

however, getting the correct iterator can often take \(O(n)\),

if you have not saved the iterator from a previous operation.

Operation |

Complexity |

|---|---|

assignment = |

O(n) |

push_back() |

O(1) |

pop_back() |

O(1) |

erase(i) |

O(1) |

insert(i, item) |

O(1) |

insert(i, b, e) |

O(n) in the range

from |

splice() |

O(1) |

begin() |

O(1) |

end() |

O(1) |

size() |

O(1) or O(n) C++11 |

size() |

O(1) after C++11 |

Both vector and list support an insert() method.

There are multiple overloads for each and both support inserting

a range of elements at an arbitrary location in the container.

The following code shows the code for

inserting a range into a container.

template<class Container>

void test_insert(Container data, Container new_data){

data.insert(data.begin(), new_data.begin(), new_data.end());

}

The ref:test_insert code <lst_test_insert> inserts the range at the

beginning of the current data set.

This situation should benefit the linked list and handicap the vector.

This is one of the classic situations where linked lists are said to

outperform vectors.

Let’s insert chunks of data onto the front of both a list and a vector.

The following code shows what happens when an int

is stored in the containers.

In this example, we take increasingly large containers and insert increasingly large containers to their fronts.

Each insert operation creates a new vector with an initial size. The test function then inserts a second vector of equal size at position 0.

Both list and vector have similar complexity for this form of insert.

Both are linear in std::distance(first, last) - and vector has an additional

linear term in the distance between the insert position and end of the container.

(Vector has all those moves to perform).

Since we chose to insert at the first element location and force the destination

vector to resize on every insert, we really expect lists to outperform vector.

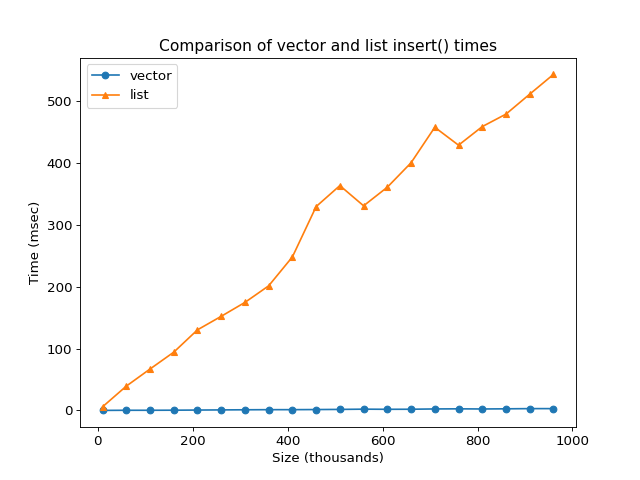

But it’s not even close. Running the previous code on values up to 1,000,000 should produce results similar to this:

Try This!

The online compiler is limited in both memory and time allowed.

Run this example on your own computer with larger values and compare.

It may not look like it, but both of these lines are both \(O(n)\) on the distance

over the size of the range inserted.

It’s just that for small types like int, the vector is on average 150 times

faster than the linked list.

How can this be?

In short: memory.

Recall that for a vector all the data resides in a single chunk of data. For a linked list, each new member lives in a separate location.

Computers have a feature called cache memory and it turns out the vector is able to exploit this resource better than a list.

What is Cache Memory?

Cache memory is a small amount of computer memory that provides high-speed data access to a processor and stores frequently used computer programs, applications and data. Cache memory is the fastest memory available and acts as a buffer between RAM and the CPU. When a processor requests data that already has an instance in cache memory, it does not need to go to the main memory or the hard disk to fetch the data. The processor checks whether a corresponding entry is available in the cache every time it needs to read or write a location which reduces the time required to access information.

Cache memory is relatively small - it is intended to speed access to frequently used data, not serve as a replacement for RAM. When the cache is full and something needs to be written, the least frequently used data is overwritten.

Although both keep their data on the free store, because the vector is a single chunk, the CPU has a better chance of keeping more of the data in cache memory.

In addition, it turns out that modern CPU’s are just very good at creating, copying, and moving chunks of memory.

There are situations where a list does outperform vector, we just have to work harder to see it.

To force our test containers to work harder, instead of a vector of int,

we create a type that is a simple array wrapper:

// a bloated class to make insert work harder

template<int N>

struct junk {

std::array<unsigned char, N> data;

};

Other than the change in type stored in the vector, nothing is different from the int

timing example.

vector<junk<2048>> vector_data (size);

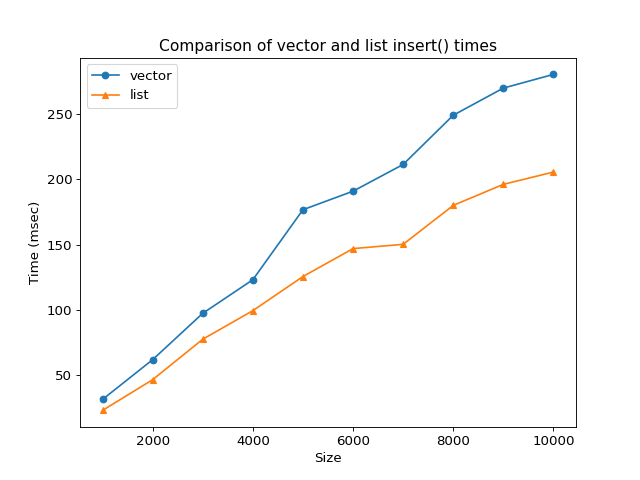

A change in data type stored in the vector produces different results.

The online compiler is limited in both memory and time allowed.

The 2MB value for JUNK_SIZE is roughly the ‘break even’ point on this compiler.

The graph below shows what running with and 8MB size looks like.

Run this example on your own computer with larger values and compare.

The sheer size of the data in each vector element increases the likelihood of a cache miss. In this case, the data is too large to fit much, if anything, in cache memory. The CPU fails to find it in cache, so it must retrieve it from RAM every time.

Both the vector and list are clearly \(O(n)\) and the list is outperforming the vector.

Try This!

What other situations might a list outperform a vector. Try some of the following with data types of different sizes:

Reversing data

Sorting data

Filling or constructing data

Removing data

More to Explore