16.6. Closed hashing¶

In closed hashing, the hash array contains individual elements rather than a collection of elements. When a key we want to insert collides with a key already in the table, we resolve the collision by searching for another open slot within the table where we can place the new key.

Each slot in the hash table contains a hash_entry, composed of

one data element and a status field indicating whether that slot is

occupied, empty, or deleted.

enum class hash_status { OCCUPIED, EMPTY, DELETED };

template <class T>

struct hash_entry

{

T data;

hash_status info;

hash_entry(): info(hash_status::EMPTY) {}

hash_entry(const T& value, hash_status status)

: data{value}

, info(status)

{}

};

The hash_set backing store is an array of hash_entry

objects.

template <class Key,

size_t N,

class Comparator=std::equal_to<Key>>

class hash_set

{

public:

using value_type = Key;

using key_type = Key;

hash_set() = default;

private:

array<hash_entry<Key>, N> table;

Comparator compare;

size_t sz = 0;

};

Collisions are resolved by trying a series of locations, \(p_0, p_1, p_2, \ldots p_{N-1}\) until we find what we are looking for. Each position is calculated as:

where:

hash(value)is the home slotf()is a function taking an integer number of tries and returns an integer offset.

How are new positions used in the hash table?

- Searching:

Try positions \(p_0, p_1, p_2, \ldots\) until we find the requested value or an

EMPTYslot.- Inserting:

Try positions \(p_0, p_1, p_2, \ldots\) until we find the same value, an

EMPTYslot, or aDELETEDslot. Put the new value in the position pound and mark the position asOCCUPIED.- Erasing:

Try positions \(p_0, p_1, p_2, \ldots\) until we find the requested value or an

EMPTYslot. If we find the value, then mark the position asDELETED.

Find takes a value of the hash_entry key type as a parameter

and returns the position of the value in the table.

It returns N if the value is not in the table.

size_t find (const Key& value) const

{

size_t hash = std::hash<Key>()(value);

size_t pos = hash % N;

size_t count = 0;

while ((table[pos].info == hash_status::DELETED ||

(table[pos].info == hash_status::OCCUPIED

&& (!compare(table[pos].data, value))))

&& count < N)

{

++count;

pos = (hash + next_slot(count)) % N;

}

if (count >= N || table[pos].info == hash_status::EMPTY) {

return N;

}

return pos;

}

The loop condition is fairly complicated and needs discussion. There are three ways to exit this loop:

We hit an

EMPTYspace (notDELETED, and notOCCUPIED)We hit an

OCCUPIEDspace that has the value we wantWe have tried

Ndifferent positions. (No place left to look!)

With find in place, other search operations are easy.

Simply call find and evaluate the results.

constexpr

bool contains (const Key& value) const noexcept

{

return find(value) != N;

}

int count (const Key& value)

{

unsigned pos = find(value);

return (pos == N) ? 0 : 1;

}

Note that since our set is still forcing a uniqueness constraint,

count will return only 0 or 1.

The code to remove elements is just as simple.

Easier than the erase we implemented for open hashing.

We try to find that element.

If found, we mark that slot

DELETEDand decrement the size.Otherwise, do nothing.

void erase (const Key& value)

{

unsigned pos = find(value);

if (pos != N) {

table[pos].info = hash_status::DELETED;

--sz;

}

}

Inserts are a bit more work, because they involve potentially looking for an open slot to store a value.

Because this is a set (and not a multiset) we first call find

to see if the value is already there.

bool insert (const Key& value)

{

size_t hash = std::hash<Key>()(value);

unsigned pos = find(value);

if (pos == N) {

size_t count = 0;

pos = hash % N;

while (table[pos].info == hash_status::OCCUPIED && count < N)

{

++count;

pos = (hash + next_slot(count)) % N;

}

if (count >= N) {

return false; // could not add, table is full

}

table[pos].info = hash_status::OCCUPIED;

table[pos].data = value;

++sz;

return true;

}

// else replace existing value

table[pos].data = value;

return true;

}

If not found (pos == N), then we need to find a slot.

The loop that does this is similar the find loop,

but unlike find, we stop at the first DELETED or EMPTY slot.

In the other searches, we had kept going past DELETED slots,

because the element we wanted might have been stored

after an element that was later erased.

But now we are only looking for an unoccupied slot to put something,

so either a slot that has never been occupied (EMPTY) or

a slot that used to be occupied but is no longer (DELETED) works.

The example contains #define statements you can use to change

how the next slot is found.

Try it with different hash table sizes to see how clumping changes with the different probing strategies.

16.6.1. Choosing the next slot¶

The function next_slot(n) in the find and insert functions

controls the sequence of positions that will be checked.

It is the implementation of the function \(f(n)\) mentioned earlier.

Recall the find function:

size_t find (const Key& value) const

{

size_t hash = std::hash<Key>()(value);

size_t pos = hash % N;

size_t count = 0;

while ((table[pos].info == hash_status::DELETED ||

(table[pos].info == hash_status::OCCUPIED

&& (!compare(table[pos].data, value))))

&& count < N)

{

++count;

pos = (hash + next_slot(count)) % N;

}

if (count >= N || table[pos].info == hash_status::EMPTY) {

return N;

}

return pos;

}

On our \(n_{th}\) try, we examine the position

where \(hash(value)\) always returns the home slot for any

hashed value.

This is the location that the value would be stored if currently unoccupied.

The f function computes an offset from the reference location.

The most common schemes for choosing the next slot are

linear probing,

quadratic probing, and

double hashing.

- Linear probing

- \[f(n) = n\]

If a collision occurs at location

pos, we next check locations \(pos+1 \pmod N, pos+2 \pmod N, pos+3 \pmod N, \ldots\) and so on.Because collisions get stored in a location originally intended for another hash code, values have a tendency to clump together in the hash table.

- Quadratic probing

- \[f(n) = n^2\]

If a collision occurs at location

pos, we next check locations \(pos+1 \pmod N, pos+4 \pmod N, pos+9 \pmod N, \ldots\) and so on.Because the jumps between slots increases as the number of tries increases, this function tends to reduce clumping (and results in shorter searches). ``But`` it is not guaranteed to find an available empty slot if the table is more than half full or if

Nis not a prime number.Note

Again, prime numbers!

Remember the earlier discussion about how \(% N\) tends to improve the key distribution when

Nis prime? You can see why it’s part of programming “folklore” that hash tables should be prime-number sized, even if most programmers can’t say why that’s supposed to be good. - Double hashing

- \[f(n) = n * h_2(value)\]

where \(h_2\) is an alternate hash code function.

If a collision occurs at location

pos, we next check locations \((pos+1*h_2(value)) \pmod N, pos+2*h_2(value)) \pmod N, pos+3*h_2(value)) \pmod N, \ldots\) and so on.This also tends to reduce clumping, but, as with quadratic hashing, it is possible to get unlucky and miss open slots when trying to find a place to insert a new key.

16.6.2. Analysis of closed hashing¶

We define \(\lambda\), the load factor of a hash table, as the number of items contained in the table divided by the table size. In other words, the load factor measures what fraction of the table is full. By definition, \(0 \le \lambda \le 1\).

Given an ideal collision strategy, the probability of an arbitrary cell being full is \(\lambda\).

Therefore, the probability of an arbitrary cell being empty is \(1 - \lambda\).

The average number of table elements we expect to examine before finding an open position is therefore \(\frac {1}{1-\lambda}\).

Since we never look at more than N positions,

given an ideal collision strategy, finds and inserts are on average

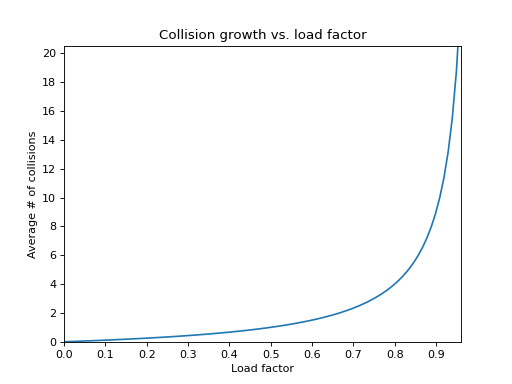

The graph shows how \(\frac {1}{1-\lambda}\) changes as \(\lambda\) increases.

If the table is less than half full (\(\lambda \lt 0.5\))

then we expect to try on average no more than 2 slots

during a search or insert.

Not too bad.

But as \(\lambda\) gets larger,

the average number of slots examined grows toward N.

As the table fills and sz approaches N, the performance

degenerates toward \(O(N)\) behavior.

Because of this, a general rule of thumb for hash tables is to keep them no more than half full. At that load factor, we can treat searches and inserts as \(O(1)\) operations. If we let the load factor get much higher, we start seeing \(O(N)\) performance.

No collision resolution scheme is truly ideal, so keeping the load factor low enough is even more important in practice than this idealized analysis indicates.

More to Explore

The content on this page was adapted from Resolving Collisions <https://www.cs.odu.edu/~zeil/cs361/f16/Public/collisions/index.html>, by Steven J. Zeil for his data structures course CS361.